Meta has announced a suite of new features aimed at improving safety for teens and children on Instagram, with updates to direct messaging, nudity protections, and expanded safeguards for adult-managed accounts that feature minors.

New DM safety tools for teen accounts

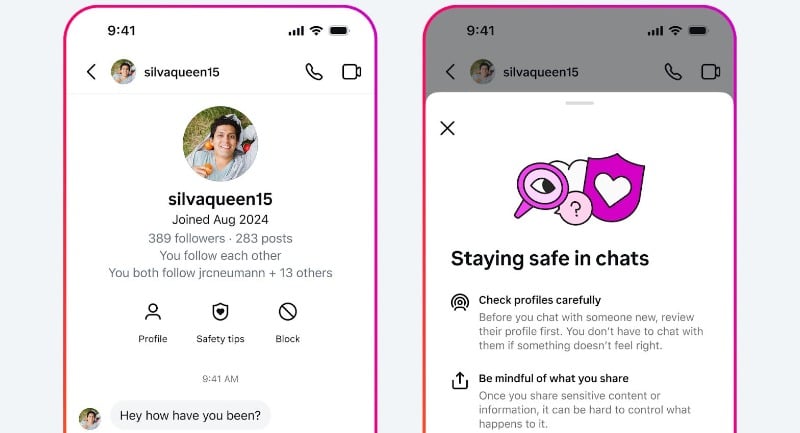

Teen users on Instagram will now see direct messaging safety tips and blocking options more prominently when starting new chats, along with details like when the other account joined Instagram. These additions aim to help teens better assess who they are interacting with and reduce the risk of contact from scammers or bad actors.

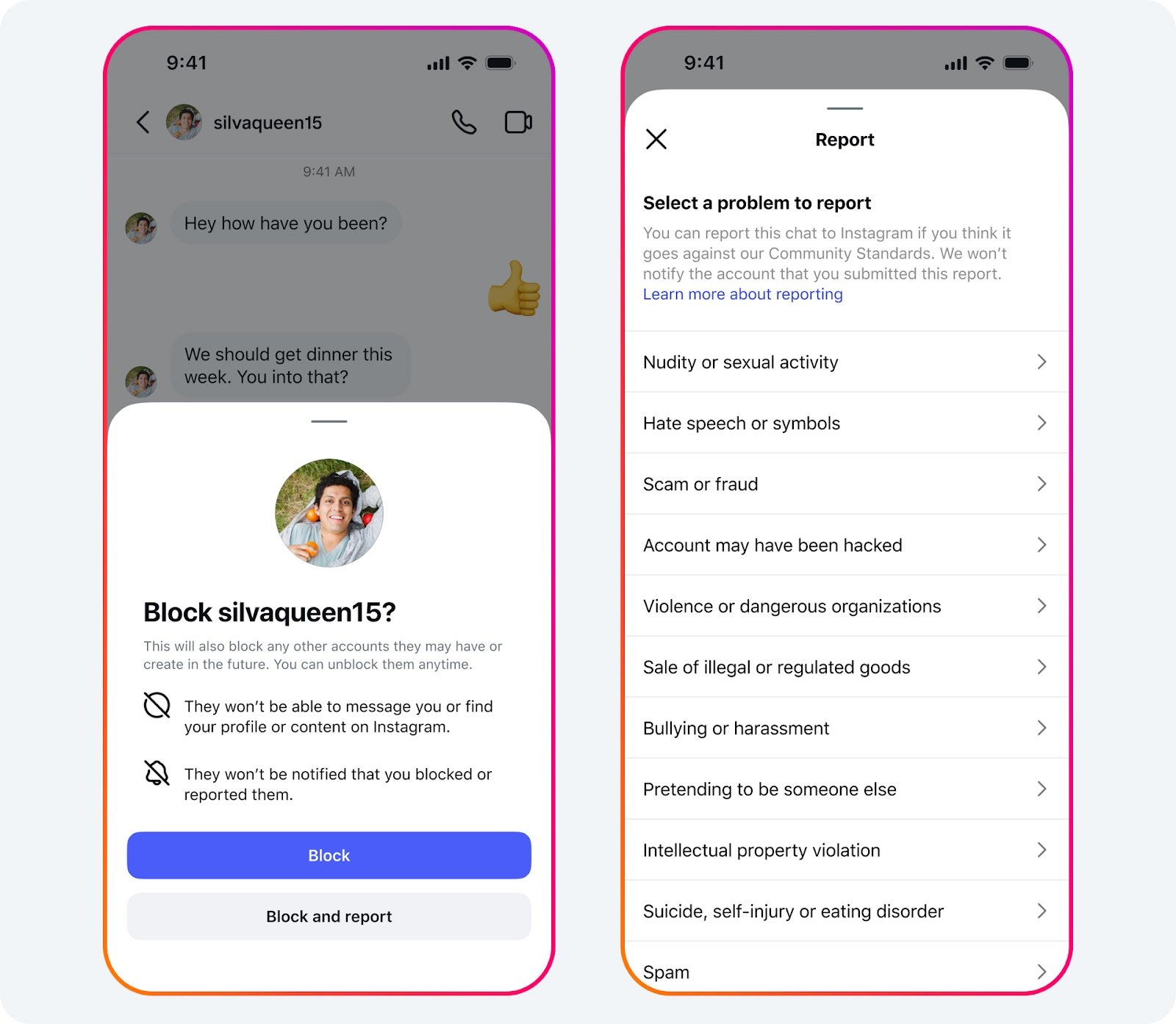

Meta is also rolling out a combined “block and report” function that lets users take both actions at once. Previously, these were separate steps. In June, teens used existing Safety Notices to block accounts one million times and to report a further one million, indicating strong engagement with the tools.

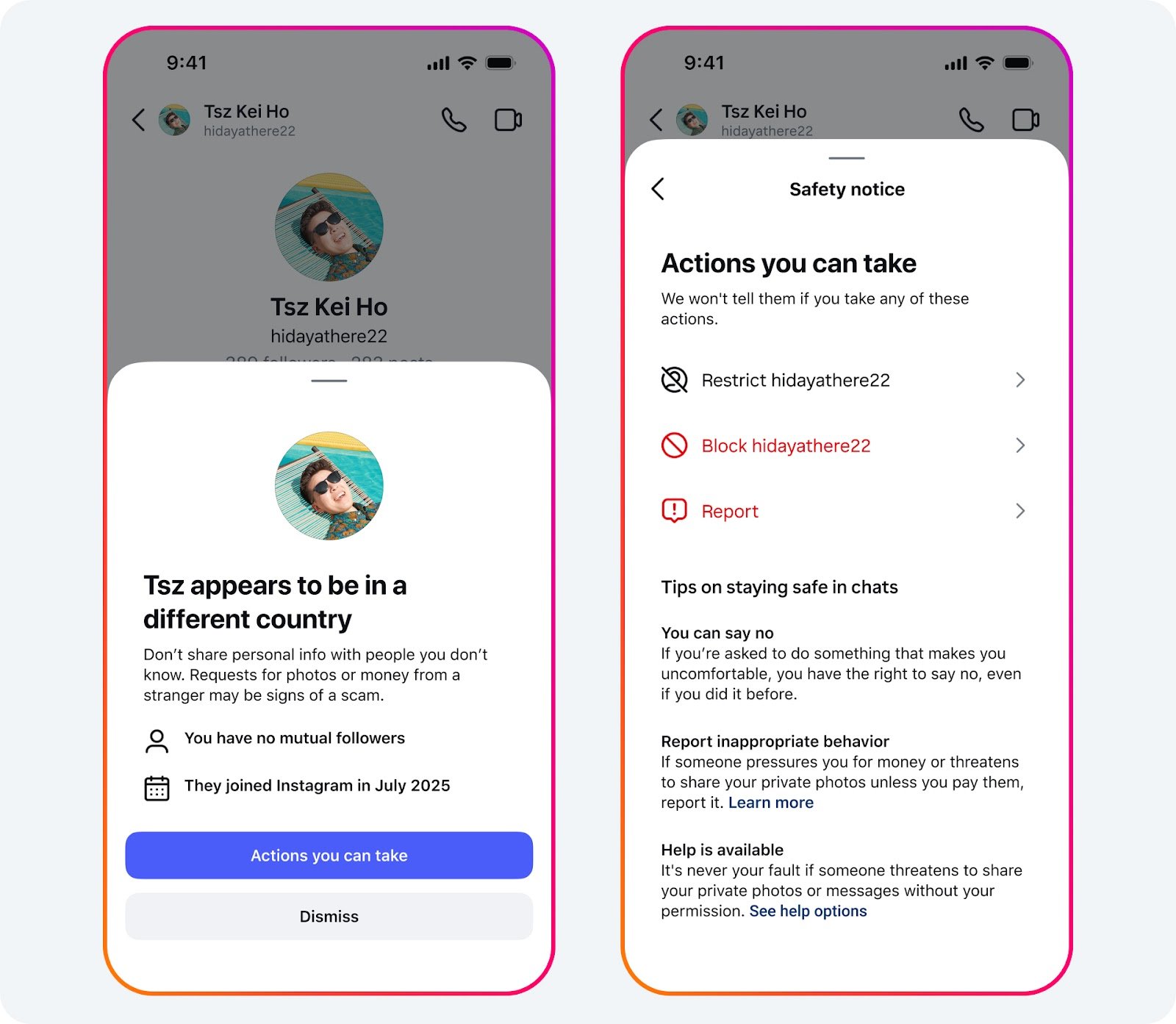

Location Notices, introduced earlier this year to flag potential location mismatches between users, were shown one million times last month. One in 10 teens who saw a notice tapped to learn more about staying safe, suggesting awareness of online threats such as sextortion scams.

Nudity protection shows signs of behavioural change

Instagram’s default-on nudity protection feature, part of its broader effort to reduce unwanted exposure to explicit content, continues to be widely adopted.

Meta says 99 per cent of users have kept the feature enabled. In June, over 40 per cent of blurred images received in DMs remained unviewed. In May, 45 per cent of users opted not to forward blurred content after receiving a warning prompt.

Stronger protections for child-focused and managed accounts

Meta is also extending some teen protections to adult-managed Instagram accounts that prominently feature children, such as accounts run by parents or talent managers. These accounts will automatically adopt stricter message settings and enable Hidden Words to filter inappropriate comments.

Additional measures will make it harder for accounts flagged as potentially suspicious, such as those blocked by teens, to discover or interact with child-focused profiles through search, recommendations, or comment sections. Meta said these updates will roll out gradually in the coming months.

Earlier this year, the company removed nearly 135,000 Instagram accounts that had posted sexualised content targeting such profiles, along with an additional 500,000 Facebook and Instagram accounts linked to them. Meta shared this data with other tech firms via the Tech Coalition’s Lantern initiative to help combat cross-platform exploitation.

These moves form part of Meta’s broader commitment to protecting young users across its platforms by defaulting to safer settings and proactively detecting and acting on harmful behaviour.