Snapchat’s approach to enforcing Australia’s under-16 social media ban is facing fresh scrutiny after The Sydney Morning Herald reported that children are easily bypassing the platform’s age verification checks.

The reporting comes just weeks after age-restricted platforms were required to demonstrate to the eSafety Commissioner that they had taken “reasonable steps” to prevent under-16s from holding accounts.

According to the publication, Snapchat relies on facial age-estimation technology from age-assurance provider k-ID. However, privacy safeguards mean the system does not cross-check whether the scanned face matches the age or gender details entered on the account.

In one example cited by the masthead, a 12-year-old girl successfully verified her Snapchat account by scanning her father’s face. The account was set up under a 30-year-old woman’s name, while the face shown was that of a man in his late 40s.

k-ID chief corporate affairs officer Luc Delany told The Sydney Morning Herald the system was intentionally designed to minimise data sharing.

“As part of this process, k-ID does not receive a user’s ‘declared age’ or ‘declared gender’,” Delany said. “This is an intentional design choice grounded in data protection and data minimisation principles.”

The outcome is a system that provides Snapchat with a simple “yes” or “no” signal on whether a scanned face appears to be 16 or older, without verifying whose face it actually is.
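The gap described above can be illustrated with a short Python sketch. It is an illustration only, based on the article's description: the names `Account`, `estimate_passes_threshold` and `platform_accepts` are hypothetical, the face-age value is made up, and none of this reflects k-ID's or Snapchat's actual code or APIs.

```python
from dataclasses import dataclass

MINIMUM_AGE = 16  # threshold described in the article


@dataclass(frozen=True)
class Account:
    """Details typed in at sign-up (hypothetical fields)."""
    declared_age: int
    declared_gender: str


def estimate_passes_threshold(estimated_face_age: float) -> bool:
    """Stand-in for the provider's check: it sees only the face estimate."""
    return estimated_face_age >= MINIMUM_AGE


def platform_accepts(account: Account, estimated_face_age: float) -> bool:
    """The platform gets back a yes/no signal and nothing else.

    The declared details on the account are never passed to the provider,
    so a mismatch between them and the scanned face cannot be detected here.
    """
    return estimate_passes_threshold(estimated_face_age)


# The case cited by the masthead: an account in a 30-year-old woman's name,
# verified with the face of a man in his late 40s (48 is an assumed value).
account = Account(declared_age=30, declared_gender="female")
print(platform_accepts(account, estimated_face_age=48.0))  # True
```

In this sketch the `account` argument is deliberately unused inside `platform_accepts`, which mirrors the data-minimisation choice Delany describes: the check answers "does this face look 16 or older?" rather than "does this face belong to the account holder?".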

Snapchat remains the most widely used social media platform among 13- to 15-year-olds in Australia, with around 440,000 users in that age bracket, accounting for 5.3% of the total monthly audience of 8.3 million.

The reporting also noted that creating new Snapchat accounts remains relatively frictionless.

Trial accounts set up by the masthead using temporary email addresses and new phone numbers were not subjected to any age verification at all, unless prompted later by the platform.

Safety claims meet practical limits

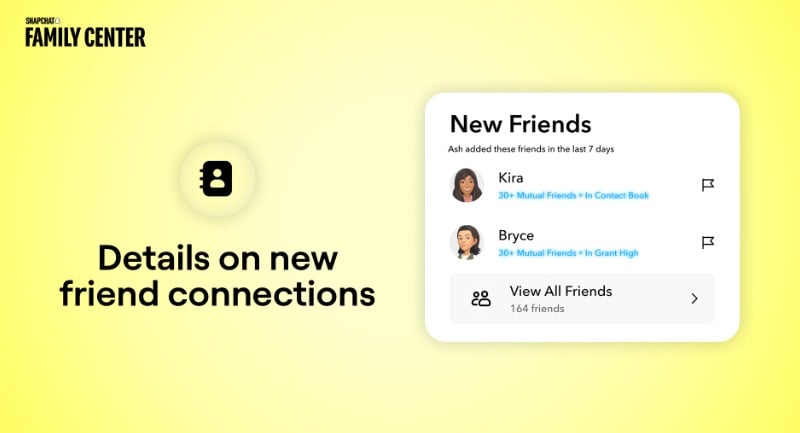

The revelations arrive as Snapchat rolls out new updates to its Family Centre tools, positioning the platform as offering parents “greater visibility” into how their teens use the app.

Announced this week, the new features let parents view the average time their teen spends on Snapchat and how that time is divided across features such as chat, camera, and content discovery. They also add context around new friends, including shared contacts or mutual friends.

I suppose that means you’ll know how long your teenager has been messaging their new 45-year-old male friends.

Snapchat said the updates were designed to support “more informed conversations” between parents and teens, while continuing to respect privacy by not granting access to private messages.

Family Centre was introduced in 2022, with Snapchat stating that strong safety settings are enabled by default and that the tools reflect “real-world family dynamics”.

Yet the Herald reporting highlights a tension between these safety assurances and the practical reality of enforcing age restrictions at scale.

Parents will be able to see the username, avatar, and mutual friends of the 45-year-old men their kids are chatting with.

A Snapchat spokeswoman told the masthead the company had raised concerns during the policy debate about how platforms could realistically prevent under-16s from accessing services.

“We continue to believe there are better solutions to age verification that can be implemented at the primary points of entry, such as the operating system, device, or app store levels,” she said.

In the meantime, Snapchat says parents can report accounts belonging to under-16s, which will then be suspended. Parents have previously complained about delays and limited responses to such reports.

Regulators watching closely

eSafety Commissioner Julie Inman Grant confirmed earlier this month that all 10 age-restricted platforms were technically compliant with the legislation as of January 16.

Prime Minister Anthony Albanese has said more than 4.7 million accounts have been removed across age-restricted platforms since the ban came into effect.

However, The Sydney Morning Herald reported that many children hold multiple accounts across several platforms, often creating new profiles to replace those that are removed.

An eSafety spokesperson told the masthead that the regulator is actively engaging with platforms and age-assurance providers, including Snapchat and k-ID, to examine any weaknesses in their implementation.

Under the Online Safety Act, responsibility for compliance sits with platforms rather than parents or users, with potential penalties of up to $49.5 million for breaches.

For now, how wide the gap is between how safe platforms say they are and how they operate in practice remains an open question.

Mediaweek has reached out to Snap for comment.
