Australia’s under-16 social media ban may be reshaping how young people spend time online, but it has not removed them from digital risk.

Teenagers are not simply bypassing the ban. Many are already using platforms and other digital spaces not included in the ban’s checklist, some of which experts argue may be far more dangerous.

One of the most overlooked areas of exposure is gaming. While often viewed as separate from social media, gaming can expose teens to harmful content, cyberbullying in multiplayer and battle royale formats, and heightened risks of predatory online behaviour, particularly when younger players interact with older demographics.

Roblox has rolled out certain age-restriction features, including built-in facial recognition, designed to prevent minors from chatting with adults and to loosely comply with the intent of the ban.

However, questions remain about how reliable these facial recognition technologies are.

There are also many other gaming platforms and communication tools that could see increased use as a result of the ban.

Although they are often discussed together, gaming and social media are not treated the same way under the legislation.

Algorithms vs live chat

Tech expert and commentator Trevor Long said the legislation has failed to keep teenagers off social platforms and may be pushing attention towards gaming environments where different, and in some cases more serious, harms exist.

“In this case, they’ve explicitly separated them at the legislative level. Gaming has had a clear exemption from the start,” Long told Mediaweek.


Long added that expectations of the ban’s effectiveness were low from the outset, mainly due to weaknesses in age verification.

He also acknowledged the risks associated with both social media and gaming but stressed that they are fundamentally different.

“They’re very different risk profiles,” he said.

On social media, the risk is being sucked into algorithms, going down rabbit holes, and being exposed to negative commentary on personal content.

In gaming environments, the concern is less about content feeds and more about who children are interacting with.

“In online gaming, the primary risk is predators. There’s some bullying risk, but the main concern is how easily predators can target children. That’s the risk the eSafety Commissioner often raises,” Long said.

That distinction, he argues, should change how policymakers approach regulation.

Why addiction may be the wrong focus

While addiction is frequently cited as a reason for tighter platform controls, Long is sceptical that it should be the driving force behind gaming regulation.

“My personal view is that this has been a very structured campaign under the banner of ‘let kids be kids.’ In this situation, we need to let parents be parents,” he said.

“If your child is addicted to gaming, you need to get them off games. If they’re addicted to their phone, you need to get them off their phone.”

Rather than broad bans, Long believes simple and targeted safeguards could significantly improve child safety in gaming environments.

“The absolute bare minimum should be that kids can only talk to kids, like the Roblox model,” he said. “That’s a strong example of how gaming safety could work and would significantly improve protections.”

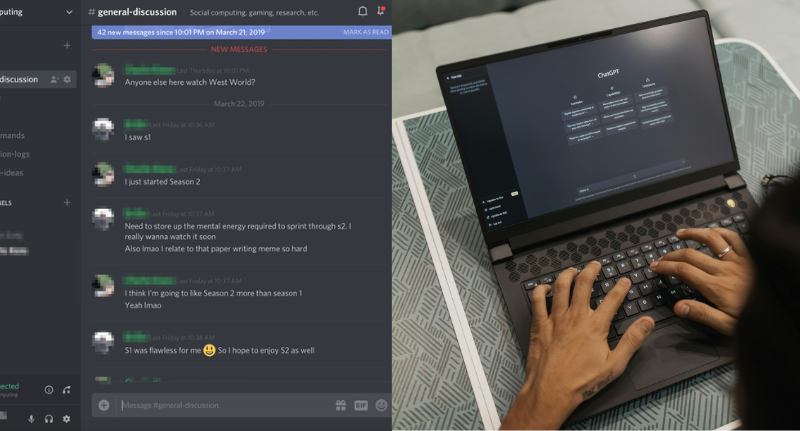

Discord and chatbots: the new meta?

One unintended consequence of the social media ban is the likelihood that children will migrate to platforms with fewer protections and less oversight.

“The most urgent one is Discord,” Long said. “It’s a cesspool of chat groups and conversations.”

The free communication app is closely tied to gaming culture, having been built primarily for gamers.

Without parental intervention, it can expose young users to not-safe-for-work content or to communication with unknown adults.

“It does have benefits for some communities, but the potential for harm is enormous. It can enable bullying and predatory behaviour,” Long said.

“There’s no algorithm, but it can still function as a rabbit hole of groups. It needs stronger monitoring, if not inclusion in regulation.”

AI-powered chatbots and tools have also been left out of the regulatory conversation, despite growing concerns about their impact on young users.

“Should kids have access to chatbots? Maybe not. But they’re nowhere near being part of this regulation,” he said.